In IT, the concept of the “cluster” plays a central role when it comes to performance, reliability and scalability.

The term is used all the time in project meetings and technical discussions, but it is often unclear what it means technically and when the effort is really worth it.

This article clears things up. We cover the basic definition, walk through the different types of clusters and show you which requirements and best practices you need to consider when setting up and operating them.

Basic definition: What is a cluster?

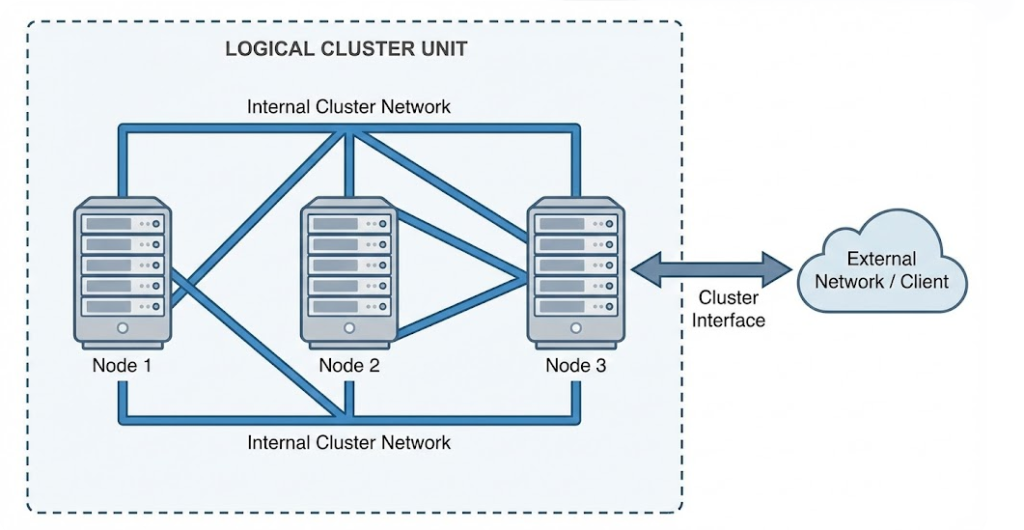

An IT cluster is a group of several computers (often referred to as “nodes”) that are networked together to perform a task jointly.

The motivation behind this is manifold: You want to achieve higher availability, scale better or simply bundle more computing power. The ultimate goal is always to ensure the operation of services or to keep performance stable under load.

It is of secondary importance whether the cluster consists of physical hardware (bare metal), virtual machines (VMs) or containers. What matters is that the cluster nodes communicate with each other: they exchange data, check each other's state (“heartbeat”) and support each other in the distribution of resources.

Why do you rely on clustering?

A central argument for clustering is almost always high availability (HA). If a server fails – whether due to a hardware defect or a software crash – another member of the cluster seamlessly takes over its tasks. Ideally, your end users won’t even notice the outage.

But that’s not the only reason. Clusters also help you handle massive amounts of data or distribute requests intelligently. Here are the top four reasons to use them:

- High Availability (HA): You avoid single points of failure (SPOF) and drastically minimize unplanned downtime.

- Load Balancing: Workloads are spread across several nodes, which keeps performance stable for the individual user.

- High performance computing (HPC): You combine the resources of multiple nodes to solve complex tasks (e.g. big data analysis or rendering) that a single machine could not handle in a reasonable time.

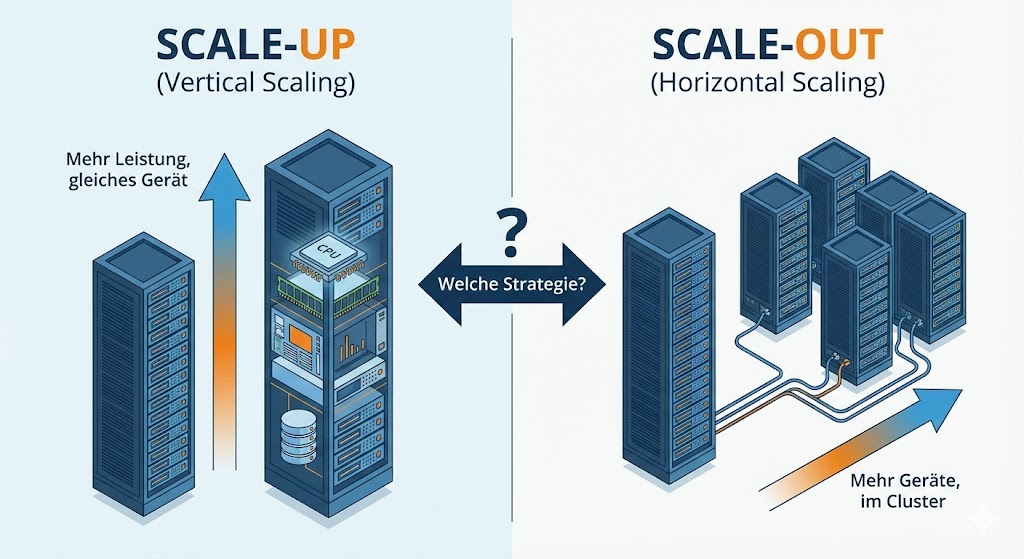

- Scalability: Is your business growing? Simply add more nodes (scale-out) instead of replacing the server with a larger one (scale-up).

Cluster types at a glance

Not every cluster has the same goal. Depending on whether you want to prevent failures, distribute loads or need pure computing power, there are essentially three main categories.

1. High Availability Cluster (HA Cluster)

The name says it all: The focus is on uptime. Typical candidates for HA clusters are databases, file servers or business-critical ERP systems. If the service fails here even for a few minutes, it costs money and frays nerves.

- How it works: Several servers (nodes) run in a network and monitor each other via “heartbeat”. If the primary node fails, the secondary node notices this and automatically takes over the services and resources (IP addresses, storage). This process is called failover.

- Examples: Windows Server Failover Clustering (WSFC), Pacemaker/Corosync on Linux.
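
The heartbeat and failover logic described above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: the names `HeartbeatMonitor`, `choose_active`, `node-a` and `node-b` are hypothetical, timestamps are passed in by hand, and real cluster stacks add fencing and quorum checks before a takeover.

```python
from dataclasses import dataclass, field


@dataclass
class HeartbeatMonitor:
    """Tracks when each peer was last heard from (hypothetical helper)."""
    timeout: float  # seconds of silence before a peer counts as failed
    last_seen: dict[str, float] = field(default_factory=dict)

    def record(self, node: str, now: float) -> None:
        # Called whenever a heartbeat message from `node` arrives.
        self.last_seen[node] = now

    def failed_nodes(self, now: float) -> list[str]:
        # A peer counts as failed once its last heartbeat is older than the timeout.
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout)


def choose_active(primary: str, secondary: str,
                  monitor: HeartbeatMonitor, now: float) -> str:
    """Return the node that should hold the service resources (IP, storage)."""
    if primary in monitor.failed_nodes(now):
        return secondary  # failover: the secondary takes over
    return primary


monitor = HeartbeatMonitor(timeout=3.0)
monitor.record("node-a", now=0.0)   # last heartbeat from the primary
monitor.record("node-b", now=4.0)   # the secondary is still alive
print(choose_active("node-a", "node-b", monitor, now=4.0))  # node-b
```

The timeout is the central tuning knob in such a design: too short and brief network hiccups trigger needless failovers, too long and users notice the outage.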

2. Load Balancing Cluster

This cluster type is the “bouncer” of your infrastructure. It distributes incoming requests from users to several backend servers. This prevents a single server from collapsing under the load and ensures consistent response times.

- How it works: A load balancer (hardware or software such as HAProxy/Nginx) is located in front of the actual servers. It accepts the request and forwards it based on configured algorithms. Common methods are:

- Round Robin: Each server is served in turn.

- Least Connections: The server with the fewest active connections receives the next request.

- Application: Classic for web servers, terminal server farms or Kubernetes ingress controllers.
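
To make the two algorithms concrete, here is a minimal Python sketch. The class names and the server names `web1` to `web3` are made up for illustration; production balancers such as HAProxy or Nginx implement these strategies themselves, with health checks and weighting on top.

```python
from itertools import cycle


class RoundRobin:
    """Hands out servers strictly in turn."""

    def __init__(self, servers):
        self._servers = cycle(servers)

    def pick(self):
        return next(self._servers)


class LeastConnections:
    """Hands out the server that currently has the fewest active connections."""

    def __init__(self, servers):
        self.active = {s: 0 for s in servers}

    def pick(self):
        # min() returns the first server with the lowest count,
        # so ties go to the server listed earliest.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection to `server` is closed.
        self.active[server] -= 1


rr = RoundRobin(["web1", "web2", "web3"])
print([rr.pick() for _ in range(4)])  # ['web1', 'web2', 'web3', 'web1']

lc = LeastConnections(["web1", "web2"])
lc.pick()            # web1 (tie, first in the list)
lc.pick()            # web2
lc.release("web1")   # web1 finishes its request
print(lc.pick())     # web1
```

Round Robin needs no state about the backends, which makes it simple and predictable. Least Connections tends to cope better when requests differ widely in duration, at the cost of tracking open connections.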

3. HPC (High Performance Computing) Cluster

If a single supercomputer is too expensive or not physically feasible, you build an HPC cluster. This is about maximum computing power through parallelization.

- How it works: A large task is broken down into many small subtasks. These are distributed to the nodes, computed in parallel, and the partial results are combined at the end. Message-passing libraries that implement the MPI (Message Passing Interface) standard are often used for this purpose.

- Areas of application: Scientific simulations (weather, physics), rendering farms for media or training AI models.
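
The split, compute and combine pattern can be sketched with Python's standard library. Note the assumptions: a local thread pool stands in for the cluster nodes, and the helper names `split`, `sum_of_squares` and `run` are invented for this example. On a real HPC cluster, an MPI library would distribute the chunks across machines instead.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Break the input into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    # The work a single "node" performs on its subtask.
    return sum(x * x for x in chunk)


def run(data, workers=4):
    chunks = split(data, workers)                    # 1. break down the task
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(sum_of_squares, chunks))  # 2. compute the parts
    return sum(partials)                             # 3. combine the results


print(run(list(range(10)), workers=4))  # 285
```

The pattern only pays off when the subtasks are largely independent. The more the workers have to exchange intermediate data, the more the interconnect becomes the limiting factor.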

Cluster Basics: Components and Prerequisites

No matter which type of cluster you choose, a cluster is more than just software. The underlying infrastructure must be right, otherwise you will only build a complex source of error instead of a high-availability solution. You need to pay attention to these four pillars:

1. Network infrastructure (The Heartbeat)

Communication between nodes is vital. If the servers no longer see each other due to network latency or outages, there is a risk of a split-brain scenario (both nodes think they are the boss and write to the data at the same time – the worst-case scenario).

- Best Practice: Use dedicated network cards or VLANs purely for the cluster heartbeat. In the HPC sector, high-speed interconnects such as InfiniBand are also common to avoid bottlenecks in data transmission.

2. Shared Storage and Data Consistency

In an HA cluster, all nodes must be able to access the same data. When node A dies, node B must be able to pick up where A left off.

- Solutions: A SAN (Storage Area Network) attached via Fibre Channel or iSCSI is the usual choice. Alternatives are software-defined storage (such as Ceph or GlusterFS) or block-based replication (DRBD), which mirrors data between nodes in real time.

3. Redundant power supply

A cluster protects you from server failures, but not from rack power outages. If all nodes are connected to the same power strip, the best cluster manager won’t help you.

- Must-have: Redundant power supplies in the servers that are connected to separate circuits and ideally to different UPS (Uninterruptible Power Supply) systems.

4. Fencing and quorum

This gets technical, but you need a mechanism that decides what happens when a node “goes crazy”.

- Fencing (STONITH): “Shoot The Other Node In The Head”. The system must be able to hard shut down a faulty node (e.g. via IPMI/management interface) to prevent data corruption.

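- Quorum: The majority decides. In the usual majority model, a part of the cluster may only keep running services if it holds more than half of all votes. With three nodes, for example, at least two must still be able to see each other.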
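
The majority rule behind quorum is simple arithmetic. The following Python sketch assumes one vote per node and a strict-majority model; the function name `has_quorum` is chosen for illustration only.

```python
def has_quorum(total_votes: int, reachable_votes: int) -> bool:
    """True if this part of the cluster holds a strict majority of all votes."""
    return reachable_votes > total_votes // 2


# A five-node cluster splits into a group of three and a group of two:
print(has_quorum(5, 3))  # True  -> this side keeps running the services
print(has_quorum(5, 2))  # False -> this side must stop (and may be fenced)

# A two-node cluster splits into one and one:
print(has_quorum(2, 1))  # False -> neither side has a majority
```

The last case shows why two-node clusters are usually given an additional tiebreaker vote (a witness or quorum device): without it, a network split leaves no side that is allowed to continue.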

Areas of application and typical scenarios

Theory is good, but where do you encounter clusters in real admin life? The areas of application depend heavily on the requirements of your company or your customers.

High availability: The 24/7 claim

In industries such as e-commerce, financial services, or healthcare, downtime is not an option.

- Scenario: An SQL Server cluster for the ERP system.

- The advantage: The cluster does not only absorb hardware defects. The often underestimated advantage is maintainability. You can take Node A offline for Windows updates or firmware patches while Node B keeps operating. Users continue to work as if nothing had happened.

Load balancing: When traffic explodes

Web applications and SaaS solutions need to be elastic. A single web server quickly reaches its limits during marketing campaigns (e.g. Black Friday).

- Scenario: A front-end web cluster behind an NGINX load balancer.

- The advantage: Scalability. If the load increases, you simply start up more web server nodes and add them to the balancer pool.

High Performance Computing: Research & Analysis

This is not about web traffic, but about raw computing power for simulations (crash tests, climate models) or big data.

- Scenario: A compute cluster that processes huge datasets for machine learning.

- The advantage: Results that would take weeks on a single workstation are available in hours.

Challenges and best practices

Building a cluster is one thing – operating it stably is another. The complexity increases with each node. To ensure that your cluster does not become a problem child, you should follow these best practices:

1. Planning is everything (Design for Failure)

Always assume the worst case. What happens if the storage network breaks down right now?

- Plan redundancies consistently (network, power, storage paths).

- Address security aspects such as firewall rules between nodes and encryption of cluster traffic from the start.

2. Testing, testing, testing (chaos engineering)

A cluster whose failover has never been tested is not a cluster, but a hope.

- Use a staging environment that matches production.

- Literally pull the plug (or disable vNICs) to see if the fencing works and the services really fail over cleanly.

3. Monitoring and alerting

Flying blind is deadly. You need to know how the nodes are doing before they go down.

- Rely on tools like Prometheus, Checkmk or Nagios.

- Monitor not only host up/down, but also metrics such as replication lag, heartbeat latencies, and I/O latency.

- Configure alerts to warn you before the disk is full or memory runs out.
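
Warning before a disk is full means looking at the trend, not just the current value. The sketch below uses a simple linear extrapolation from two measurements, similar in spirit to what Prometheus offers with `predict_linear`. The function names, the 24-hour threshold and the sample numbers are assumptions for illustration.

```python
def hours_until_full(used_then, used_now, hours_between, capacity):
    """Estimate the hours until the disk is full from two usage samples."""
    growth_per_hour = (used_now - used_then) / hours_between
    if growth_per_hour <= 0:
        return None  # usage is flat or shrinking, no fill-up in sight
    return (capacity - used_now) / growth_per_hour


def should_alert(used_then, used_now, hours_between, capacity,
                 warn_within_hours=24.0):
    """Alert if the disk is projected to fill up within the warning window."""
    eta = hours_until_full(used_then, used_now, hours_between, capacity)
    return eta is not None and eta <= warn_within_hours


# 500 GB disk, 400 GB used six hours ago, 430 GB used now:
print(hours_until_full(400, 430, 6, 500))  # 14.0
print(should_alert(400, 430, 6, 500))      # True
```

Compared with a fixed threshold such as "alert at 90 %", a trend-based check reacts earlier to fast-growing volumes and stays quiet on disks that are full but stable.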

4. Automation (Infrastructure as Code)

Manual configurations lead to “configuration drift” – Node A is suddenly configured differently than Node B.

- Use tools like Ansible, Terraform, or Puppet to keep the cluster configuration consistent and reproducible. This is essential, especially in large environments.
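
Configuration drift is easy to picture as a comparison of per-node settings. The following Python sketch only detects drift and does not fix it. The function `find_drift`, the node names and the settings are hypothetical, and setting values are assumed to be simple hashable types such as strings and numbers.

```python
def find_drift(configs):
    """Return {setting: {node: value}} for every setting that differs between nodes.

    `configs` maps each node name to a dict of its settings.
    A setting missing on a node is reported as None for that node.
    """
    settings = set().union(*(cfg.keys() for cfg in configs.values()))
    drift = {}
    for key in sorted(settings):
        values = {node: cfg.get(key) for node, cfg in configs.items()}
        if len(set(values.values())) > 1:  # more than one distinct value
            drift[key] = values
    return drift


nodes = {
    "node-a": {"kernel": "5.15", "mtu": 9000},
    "node-b": {"kernel": "5.15", "mtu": 1500},
}
print(find_drift(nodes))  # {'mtu': {'node-a': 9000, 'node-b': 1500}}
```

Infrastructure-as-code tools approach the problem from the other side: instead of comparing nodes after the fact, they apply one shared definition to all of them, so differences do not arise in the first place.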

Outlook and conclusion

Cluster technologies are the backbone of modern IT. They remove the physical limits of individual machines and enable systems that (almost) never sleep and can grow as needed.

The basic idea is simple: teamwork beats lone wolves. But practice requires know-how. If you want to operate clusters successfully, you have to understand that hardware and software, network and storage must work hand in hand.

Where are things headed?

The future is hybrid. We are increasingly seeing “stretched clusters” that span on-premises data centers and the cloud. Technologies like Kubernetes further abstract the classic cluster, so you can worry less about the individual server and more about the application. But whether on-premises or in the cloud: The principles of redundancy, quorum and load balancing remain the basis that you as an admin must master.